Do you know what cache memory is and what it is for? If not, this post answers both questions. It also covers the different types of cache memory, how memory blocks are mapped to the cache, and how cache performance is measured. Read on to learn more.

Cache Memory

Definition

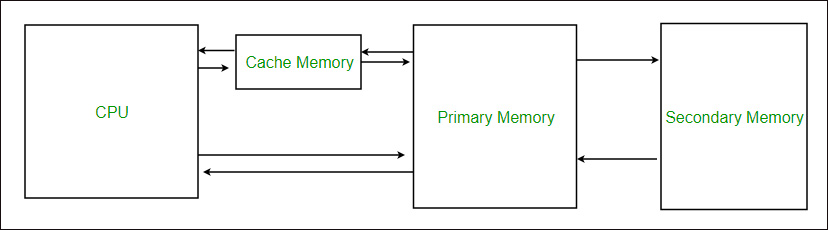

What is cache memory? Cache memory is a chip-based computer component that makes retrieving data from the computer’s memory more efficient. It acts as a temporary storage area from which the processor can retrieve data quickly, and it serves as a buffer between RAM and the CPU.

What is the purpose of cache memory? It speeds up memory access so that memory can keep pace with a high-speed CPU. It holds frequently requested data and instructions so that they are immediately available to the CPU when needed. Cache memory is more expensive than main memory or disk storage, but less expensive than CPU registers.

Types

Traditionally, cache memory is classified into “levels” that describe its proximity and accessibility to the microprocessor. The levels of cache memory are as follows:

Level 1: Level 1 cache is the primary cache, which is very fast, but relatively small. It is usually embedded as a CPU cache in the processor chip.

Level 2: Level 2 cache is the secondary cache, which is usually larger than level 1 cache. L2 cache can be embedded in the CPU, or it can sit on a separate chip or coprocessor with a dedicated high-speed bus connecting the cache and the CPU.

Level 3: Level 3 cache is specialized memory that aims to improve the performance of level 1 and level 2. L3 cache is usually about twice as fast as DRAM, but L1 and L2 caches can be much faster than L3. In multi-core processors, each core can have dedicated L1 and L2 caches, while all cores share the L3 cache.

In the past, L1, L2, and L3 caches were built using a combination of processor and motherboard components. Now, the trend is to integrate all three levels of cache memory into the CPU itself.

Mapping

Cache memory uses three mapping types: direct mapping, associative mapping, and set-associative mapping. The details are as follows:

Direct mapping: Direct mapping is the simplest technique. It maps each block of main memory to exactly one possible cache line.

If that line is already occupied by another memory block when a new block needs to be loaded, the old block is discarded. The address is divided into two parts: the index field and the tag field. The index selects the cache line, and the tag stored with that line identifies which memory block currently occupies it.
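
The idea can be sketched in a few lines of Python. This is only an illustration under assumed parameters, not a model of any real processor: the names `split_address` and `DirectMappedCache`, the 4-line cache, and the 16-byte block size are all hypothetical. The sketch also separates a byte offset within the block from the index and tag, which is an assumption beyond the two-field split described above.

```python
def split_address(address, num_lines, block_size):
    """Split a byte address into (tag, index, offset) for a direct-mapped cache."""
    offset = address % block_size          # byte position inside the block
    block_number = address // block_size   # which memory block holds the byte
    index = block_number % num_lines       # the one cache line this block may use
    tag = block_number // num_lines        # tells apart blocks that share a line
    return tag, index, offset


class DirectMappedCache:
    """Toy direct-mapped cache that tracks only the tag held by each line."""

    def __init__(self, num_lines=4, block_size=16):  # sizes are assumptions
        self.num_lines = num_lines
        self.block_size = block_size
        self.tags = [None] * num_lines     # None means the line is empty

    def access(self, address):
        """Return True on a hit; on a miss, load the block into its line."""
        tag, index, _ = split_address(address, self.num_lines, self.block_size)
        if self.tags[index] == tag:
            return True
        self.tags[index] = tag             # whatever was in the line is discarded
        return False


cache = DirectMappedCache()
# Addresses 0 and 64 fall in different blocks that share line 0.
results = [cache.access(a) for a in (0, 4, 64, 0)]
print(results)
```

With these assumed sizes, the second access hits because address 4 lies in the same block as address 0, while the last access misses because the block at address 64 has replaced it in line 0.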

Associative mapping: In this type of mapping, associative memory stores both the contents and the addresses of memory words. Any block can go into any line of the cache. This means that the word ID bits identify which word in the block is needed, while all the remaining address bits become the tag.

This makes it possible to place any block anywhere in the cache. It is generally considered the fastest and most flexible form of mapping, though checking the tag of every line at once makes it more costly to build.
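
A minimal Python sketch of associative mapping follows. It rests on several assumptions that do not come from this post: the class name `FullyAssociativeCache`, the 2-line capacity, the 16-byte block size, and the least-recently-used (LRU) replacement policy are chosen only for illustration. The dictionary lookup stands in for the parallel tag comparison that hardware performs.

```python
from collections import OrderedDict


class FullyAssociativeCache:
    """Toy fully associative cache; any block may occupy any line."""

    def __init__(self, num_lines=2, block_size=16):  # sizes are assumptions
        self.num_lines = num_lines
        self.block_size = block_size
        # Keys are tags, ordered from least to most recently used.
        self.lines = OrderedDict()

    def access(self, address):
        """Return True on a hit; on a miss, load the block (LRU eviction)."""
        tag = address // self.block_size   # every bit except the in-block offset
        if tag in self.lines:
            self.lines.move_to_end(tag)    # mark as most recently used
            return True
        if len(self.lines) >= self.num_lines:
            self.lines.popitem(last=False)  # assumed policy: evict the LRU block
        self.lines[tag] = None
        return False


fa_cache = FullyAssociativeCache()
fa_results = [fa_cache.access(a) for a in (0, 64, 0, 128, 64)]
print(fa_results)
```

Because no block is tied to a particular line, the blocks at addresses 0 and 64 can sit in the cache together, so the third access hits. A miss only forces an eviction once every line is in use.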

Set-associative mapping: This form of mapping is an enhanced form of direct mapping that removes its main drawback. It addresses the thrashing that can occur with direct mapping, where blocks that map to the same line keep evicting each other.

It does this by grouping several lines together into a set, instead of giving each block exactly one line it can map to. A block in memory can then map to any line of one specific set.
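
The following Python sketch shows one way this could look. Everything specific in it is an assumption made for illustration: the class name `SetAssociativeCache`, two sets of two lines each, 16-byte blocks, and least-recently-used (LRU) replacement within a set.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy set-associative cache: a block maps to one set, any line within it."""

    def __init__(self, num_sets=2, ways=2, block_size=16):  # sizes are assumptions
        self.num_sets = num_sets
        self.ways = ways                   # number of lines per set
        self.block_size = block_size
        # One ordered dict per set; keys are tags, least recently used first.
        self.sets = [OrderedDict() for _ in range(num_sets)]

    def access(self, address):
        """Return True on a hit; on a miss, load the block into its set."""
        block_number = address // self.block_size
        set_index = block_number % self.num_sets   # the set is fixed by the address
        tag = block_number // self.num_sets
        lines = self.sets[set_index]
        if tag in lines:
            lines.move_to_end(tag)         # mark as most recently used
            return True
        if len(lines) >= self.ways:
            lines.popitem(last=False)      # assumed policy: evict the LRU line
        lines[tag] = None
        return False


sa_cache = SetAssociativeCache()
sa_results = [sa_cache.access(a) for a in (0, 64, 0, 64)]
print(sa_results)
```

Addresses 0 and 64 both map to set 0 here, yet both blocks fit because the set has two lines, so the repeated accesses hit. In a direct-mapped cache with four 16-byte lines, the same two addresses would share line 0 and evict each other on every access.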

Performance

When the processor needs to read or write a location in main memory, it first checks for a corresponding entry in the cache. Cache memory performance is usually measured by a quantity called the hit ratio, which is the number of cache hits divided by the total number of accesses (hits plus misses). You can improve cache performance by using a larger cache block size or higher associativity, and by reducing the miss rate, the cost of each miss, and the time to hit in the cache.
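
As a rough illustration, the hit ratio and its effect on access time can be computed as below. The function names and the sample numbers (90 hits, 10 misses, a 1-cycle hit time, a 100-cycle miss penalty) are made up for the example. The second function uses the standard average memory access time formula, hit time plus miss rate times miss penalty, which this post does not state explicitly.

```python
def hit_ratio(hits, misses):
    """Fraction of accesses that were served from the cache."""
    total = hits + misses
    if total == 0:
        raise ValueError("no accesses recorded")
    return hits / total


def average_access_time(hit_time, miss_rate, miss_penalty):
    """Average memory access time: hit time plus the expected cost of misses."""
    return hit_time + miss_rate * miss_penalty


# Hypothetical counts and timings, in CPU cycles.
ratio = hit_ratio(hits=90, misses=10)
amat = average_access_time(hit_time=1.0, miss_rate=1 - ratio, miss_penalty=100.0)
print(f"hit ratio = {ratio:.2f}, average access time = {amat:.1f} cycles")
```

With these sample numbers the hit ratio is 0.9 and the average access time is about 11 cycles, which shows why even a small miss rate matters when a miss is expensive.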

Final Words

To conclude, this post has introduced cache memory. You now know its definition, types, and purpose, as well as how cache mapping works and how cache performance is measured.